Rapid advances in AI technologies, and their wide availability, have made companies keen to adopt and capitalize on them. However, Generative AI has been shown to produce ‘untrustworthy’ results that can be plain wrong or biased. Such outcomes can harm reputations, generate bad press and break the trust of investors and consumers.

Governments around the world are keen to set up guidelines for responsible AI. The UK, for example, has created an Office for AI, which is responsible for encouraging safe and innovative use of AI. Elsewhere, there is a joint US-EU initiative to draft a set of voluntary rules for businesses, called the “AI Code of Conduct”, in line with their Joint Roadmap for Trustworthy AI and Risk Management.

In APAC, Singapore has been instrumental in spearheading responsible AI adoption. Indonesia and Thailand have also made strides in government AI readiness, reflecting their recent implementation of national AI strategies. Japan’s new AI guidelines are a big step towards responsible AI use, stressing the importance of avoiding biased data and promoting transparency. Nations including New Zealand, Australia, South Korea and India are drawing up governance and ethics guidelines, some of them voluntary, and some are taking steps towards legislation. In December 2023, the EU announced a deal on its AI Act, a set of comprehensive rules for trustworthy AI. More recently in Australia, the Federal Government stated publicly that it will put mandatory guardrails in place for the use of AI in business and industry, which could include outright prohibition of ‘high-risk’ AIs.

Across the international community, governments and businesses are striving towards trustworthy AI at both macro and micro levels. Developers, technology service providers and systems integrators in the APAC region should anticipate accelerated regulatory review of development practices, adoption, implementation and use of AI in business and industry.

For many of our partners, the initial foray into AI may start with Generative AI and Large Language Models. In our previous post, we explored the concepts of Fair AI and responsible use of AI. In this update, we dive into Trustworthy AI, what it comprises and Crayon’s blueprint for ethical and explainable Large Language Models (LLMs).

Let’s look at how trustworthy AI relates to, and differs from, Fair AI, Responsible AI and Safe AI.

Fair AI: This is a subset of trustworthy AI, specifically addressing the need for AI to be unbiased and to treat all users equitably. While fairness is a critical aspect, trustworthy AI also requires the system to be reliable, lawful, ethical, and robust.

Responsible AI: Responsible AI is a broader category that includes fairness but also extends to ethical obligations and societal impacts, and to ensuring that AI acts in people’s best interests. Trustworthy AI encompasses all these aspects of responsible AI but also implies a level of trust from users that the AI system will behave as expected in a wide range of scenarios and over time.

Safe AI: Safety is another essential facet of trustworthy AI, emphasizing the need for AI systems to operate without causing unintended harm. Trustworthy AI takes this further by ensuring not only that the AI doesn’t cause harm but also that it is dependable, maintains data privacy, and is secure against attacks or failures.

While fair, responsible, and safe AI are components that contribute to the trustworthiness of an AI system, trustworthy AI is the overarching goal, ensuring that AI systems are designed and deployed in a manner that earns the confidence of users and the general public and is worthy of that trust.

Trustworthy AI can be considered an umbrella term that implies an AI system is:

We want to ensure that AI systems are worthy of our trust. At Crayon, we underscore the importance of trustworthy AI by integrating ethical considerations into the development and deployment of LLM-based solutions in all the AI projects we deliver.

The concerns surrounding large language models (LLMs) as “black boxes” with opaque decision-making processes are well-founded, considering their intricate architectures. Nonetheless, advancements in the field are leading to increased explainability and control over these models.

A case in point is Crayon’s approach. Crayon conducts research in AI explainability, adopting emerging methods and tools to improve insight into LLM behaviour. For instance, techniques analogous to those used in medical diagnosis tools to identify influential parts of an image could be adapted to pinpoint the text segments in LLM inputs that drive certain responses.
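One simple way to carry the image-saliency idea over to text is occlusion: remove one token at a time and measure how much the model’s score changes. The Python sketch below is only an illustration of that general technique, not Crayon’s method. The `toy_score` function and its small lexicon are hypothetical stand-ins for a real model call.

```python
from typing import Callable, List, Tuple

# Hypothetical sentiment lexicon, invented for this illustration only.
TOY_LEXICON = {"great": 1.0, "love": 1.0, "slow": -1.0, "terrible": -2.0}


def toy_score(tokens: List[str]) -> float:
    """Stand-in for a real model call: sums the lexicon weights of the tokens."""
    return sum(TOY_LEXICON.get(t.lower(), 0.0) for t in tokens)


def occlusion_attribution(
    tokens: List[str], score: Callable[[List[str]], float]
) -> List[Tuple[str, float]]:
    """Return (token, importance) pairs.

    Importance is the baseline score minus the score obtained when that
    single token is removed from the input.
    """
    baseline = score(tokens)
    result = []
    for i, tok in enumerate(tokens):
        occluded = tokens[:i] + tokens[i + 1:]  # input with token i removed
        result.append((tok, baseline - score(occluded)))
    return result


tokens = "The support was great but delivery was terrible".split()
attributions = occlusion_attribution(tokens, toy_score)

# Rank tokens by the size of their effect, largest first.
ranked = sorted(attributions, key=lambda pair: abs(pair[1]), reverse=True)
for token, importance in ranked[:2]:
    print(f"{token}: {importance:+.1f}")
```

With a real LLM, `toy_score` would be replaced by a call that returns a numeric score for the output of interest, and each token would cost one extra model call.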

The ability of LLMs like ChatGPT to self-explain can be exploited to comprehend the reasoning behind their responses. For instance, in sentiment analysis, LLMs might identify and enumerate the sentiment-charged words shaping their conclusions, providing a transparent layer, and facilitating comparison with traditional methods like LIME saliency maps.

Rigorous assessment of these self-explanations against established methods (e.g. SHAP, LIME) validates their efficacy. Metrics are employed to gauge the faithfulness and intelligibility of these explanations. And, acknowledging the dynamic nature of AI systems, including LLMs, Crayon commits to perpetual monitoring and enhancement of explainability features, revisiting current interpretability practices in response to LLM advancements.
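To make the idea of scoring a self-explanation more concrete, here is a minimal Python sketch of two simple checks: word overlap with a reference attribution, and the score change when the cited words are deleted. It is an assumption-laden illustration rather than Crayon’s evaluation pipeline. The scorer, the lexicon, the “self-reported” words and the “reference” words are all hypothetical; in practice the reference set would come from a method such as LIME or SHAP.

```python
from typing import Callable, List, Set

# Hypothetical lexicon and scorer standing in for a real sentiment model.
TOY_LEXICON = {"great": 1.0, "love": 1.0, "slow": -1.0, "terrible": -2.0}


def toy_score(tokens: List[str]) -> float:
    """Stand-in for a real model call: sums the lexicon weights of the tokens."""
    return sum(TOY_LEXICON.get(t.lower(), 0.0) for t in tokens)


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two word sets: size of intersection over size of union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deletion_effect(
    tokens: List[str], explanation: Set[str], score: Callable[[List[str]], float]
) -> float:
    """Absolute change in score when the explanation words are removed.

    A larger value suggests the cited words really did drive the output.
    """
    kept = [t for t in tokens if t.lower() not in explanation]
    return abs(score(tokens) - score(kept))


tokens = "The support was great but delivery was terrible and slow".split()

# Hypothetical inputs: words the LLM says it relied on, and the top words
# from a reference attribution method.
self_reported = {"terrible", "slow"}
reference_words = {"terrible", "slow", "great"}
control_words = {"the", "was"}  # words that should not matter

overlap = jaccard(self_reported, reference_words)
effect = deletion_effect(tokens, self_reported, toy_score)
control_effect = deletion_effect(tokens, control_words, toy_score)

print(f"overlap with reference: {overlap:.2f}")
print(f"deletion effect (self-reported): {effect:.1f}")
print(f"deletion effect (control): {control_effect:.1f}")
```

The control set gives a baseline: a self-explanation that changes the score no more than arbitrary words would is unlikely to be faithful.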

We embrace user-centric design and believe it is imperative to ensure that explanations are accessible and comprehensible, catering to various expertise levels, from laypeople to AI specialists.

Further, Crayon integrates ethical considerations into the development and deployment of LLM-based solutions, ensuring transparency about AI explanations’ limitations and actively addressing biases and potential harms.

We have adopted an “Explainable by Design” strategy, employing specific paradigms according to the problem context. These include:

Crayon’s strategic use of these paradigms maximizes LLMs’ inherent controllability and explainability, ensuring effectiveness, transparency, and trustworthiness. This proactive stance integrates explainability into LLM deployment’s core. Implementing these strategies not only enhances LLMs’ explainability and control but also yields considerable business benefits:

Integrating explainability and control in LLMs aligns with ethical AI practices and offers substantial business advantages. These include risk mitigation, user trust enhancement, appropriate solution deployment, competitive edge, and regulatory compliance, all of which are crucial for the sustainable and responsible growth of AI-driven enterprises.

Incorporating explainability and control in large language models (LLMs) aligns with the global shift towards trustworthy AI, emphasizing transparency and fairness. This approach not only builds trust among stakeholders in sectors where clear, justifiable decision-making is crucial, like healthcare and finance, but also addresses the increasing social awareness of technology’s impact.

Explainable AI plays a key role in identifying and correcting biases and in ensuring fair and equitable operations, making it a significant step towards trustworthy AI. This commitment reflects a deep understanding of AI’s societal context: it addresses society’s concerns, aims to comply with what organizations and governments are legislating for, and demonstrates a dedication to responsible innovation and sustainable business practices, which are essential for maintaining operational legitimacy and social license.

The appetite for AI is high and partners are on the frontline of educating customers about the right ways to think about the value of AI in their businesses.

Having a trusted AI sherpa to guide your own learning journey is essential and that’s where Crayon offers a unique difference to our partners.

Far beyond the cloud solutions distribution and licensing expertise we offer, Crayon partners benefit from nearly a decade’s worth of frontline, hands-on AI solution development.

Crayon is an Azure OpenAI Global Strategic Partner with two Data and AI Centers of Excellence (COEs), including one based in Singapore. We are the only services company with cloud distribution capabilities across the APAC channel to have over 300 applied AI projects in market, including more than 2,500 models running on a proprietary accelerated MLOps framework.

Our work in this field is governed by the Crayon Responsible AI Guidelines (CRAIG). CRAIG grounds our services in sustainability, ethics, trust, robust engineering, and security. It establishes the in-depth policy mechanisms of our genuinely responsible AI organization. This policy is tightly integrated into our sales and delivery processes, as well as our governance and support structures and our knowledge dissemination.

This is material for any partner that is using, integrating or implementing AI in their own business or for their customers, and wants absolute confidence that their distributor of choice understands the nuanced perspectives involved.

If you are exploring the future of AI as a practice in your business and want to know more about the Crayon Responsible AI Guidelines (CRAIG), reach out to our Technology Advisory Group.

This post was originally published on Crayon.

Blogs

Catch our comprehensive summary of the highlights from Microsoft Ignite 2025, combined with the resources and links you need to dig into the detail!

Training

In our latest webinar, our in-house Modern Work experts Jye Wong and Ksenia Turner will run you through a practical refresher on Solution Partner Designations; what they are, why they matter and how to get started.

Sales and Marketing

Business leaders don't live in the tools. They live in the outcomes. The metrics they care about most are not always limited to compliance and risk. So how do you connect data protection to the big-ticket objectives, when they're less obvious? Our in-house pre-sales expert, Michael Brooke explains.

Guides and eBooks

As SMBs mature in the Data Protection lifecycle, they need help to optimise spend, reduce the compliance burden and ensure results align to business objectives. The third installment of our Data Protection Playbook series provides practical guidance for partners on how to address emerging pressure and connect ongoing investment to measurable business value.

Training

Copilot Agents: what are they and how do they differ from AI assistants and chatbots? Our in-house Copilot expert Ksenia Turner explains the use cases and service opportunities for partners.

Blogs

Fragmented data protection systems and processes create compliance proof-gaps for SMB customers. Scott Hagenus, Director, Cybersecurity here at Crayon explains.

Guides and eBooks

How can partners help their SMB customers to move from silos of security and continuity to a more cohesive, measurable and insurable data protection framework? The second edition in our Data Protection Playbook series maps out their journey, and yours.

Sales and Marketing

Ever wonder why a pitch has some IT Managers leaning forward, while others glaze over? Michael Brooke, Cybersecurity Pre-Sales Lead offers some insight on how to tune your approach to chime with different technical mindsets.

Vendor Announcements

Copilot for Business has landed at Microsoft Ignite 2025, levelling the GenAI playing field for SMB customers. Learn all about it from our man on the ground, Andreas Bergman.

Press Release

Press Release

Blogs

As cybersecurity and continuity converge in platforms and in practice, partners need new playbooks to address modern Data Protection standards. Our in-house cybersecurity pre-sales lead, Michael Brooke explains why.

Guides and eBooks

What triggers an SMB customer to begin exploring their need for better Data Protection? The first of our four Data Protection Playbooks for partners breaks down how to position and win in the Pre-Adoption and Exploration stage.

Insights

Data Protection priorities are shifting for SMBs. Ramp up your ability to respond with curated insights, articles and resources to help you guide every customer conversation with confidence.

Partner Spotlight

In this Partner Spotlight, Acceltech Managing Director Ivy Tarrobago shares how Crayon’s responsive support enhances client outcomes and business growth.

Whitepapers

Data Protection is a must for all SMBs but how can partners align solution investment with critical business objectives? Our latest paper shows you how.

Press Release

Crayon has been recognised with a huge double win at the CRN Channel Asia awards ceremony.

Insights

All the latest insights, articles and resources on M365 Copilot, curated into one place.

Insights

SMB customers are storing greater volumes of sensitive data in more places than ever. Secure backup and recovery practices are essential to how they protect it.

Partner Spotlight

Bigfish Technology saved AU$20,000 on its annual Microsoft licensing after one call with Crayon and has since built a strong partnership that enabled Bigfish to get access to Crayon’s expertise and vendor ecosystem.

Insights

Insider risk is a subtle and continuous challenge for SMB customers. Turn it into a manageable and quantifiable aspect of their Data Protection strategy.

Blogs

From rethinking backup to governance frameworks and behavioural analytics, what's involved in building a complete Data Protection strategy for SMB customers?

Insights

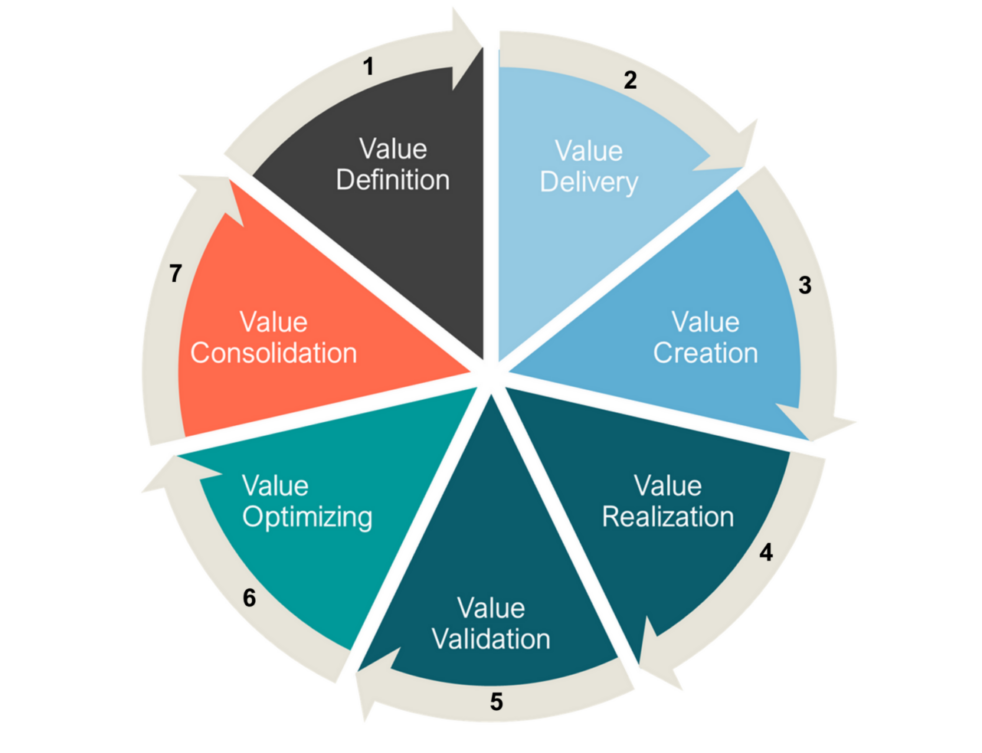

We explore the evolution of Microsoft's channel strategy over the past ten years, and what can be learned by viewing it through a Value Cycle lens.

Guides and eBooks

The Microsoft Fabric Partner Guide curates our recent articles, videos and resources to accelerate Crayon partner learning.

Insights

Support your cybersecurity game plans with our top picks of new and updated risk and resilience resources.

Guides and eBooks

Partners, get your Bond on! Our Cyber Operatives Field Guide breaks down five cybersecurity missions to foil would-be cybercriminals.

Company Announcements

Webinars Series

Press Release

Blogs

Blogs

Connect Data Protection to strategic objectives via this Whitepaper

What are the most critical business objectives and solution adoption priorities for SMBs in our region? Download the latest Forrester study to find out!

Our APAC channel business is now part of a global organisation. That means there is a whole new world of value on offer for our partners. We can help you to tap into all of it.